Introduction to AI: The Fundamentals of AI Unveiled

Before delving into the complexities of AI, this article covers the fundamentals to help you understand how AI has been influencing businesses.

"AI might be a powerful technology, but things won't get better simply by adding AI."

- Vivienne Ming

AI has dominated the news since the advent of ChatGPT. What is happening? Nvidia joined the trillion-dollar club and displaced Netflix in the FAANG acronym (Facebook, Apple, Amazon, Netflix, Google), driven by surging demand for the processors that power artificial intelligence (AI) applications. In this AI renaissance, AI is set to become standard in the workplace as companies move from digital transformation to AI transformation. Understanding how AI works, where its opportunities lie, and how to scale it across a business will be key to keeping pace with that future.

Understanding AI

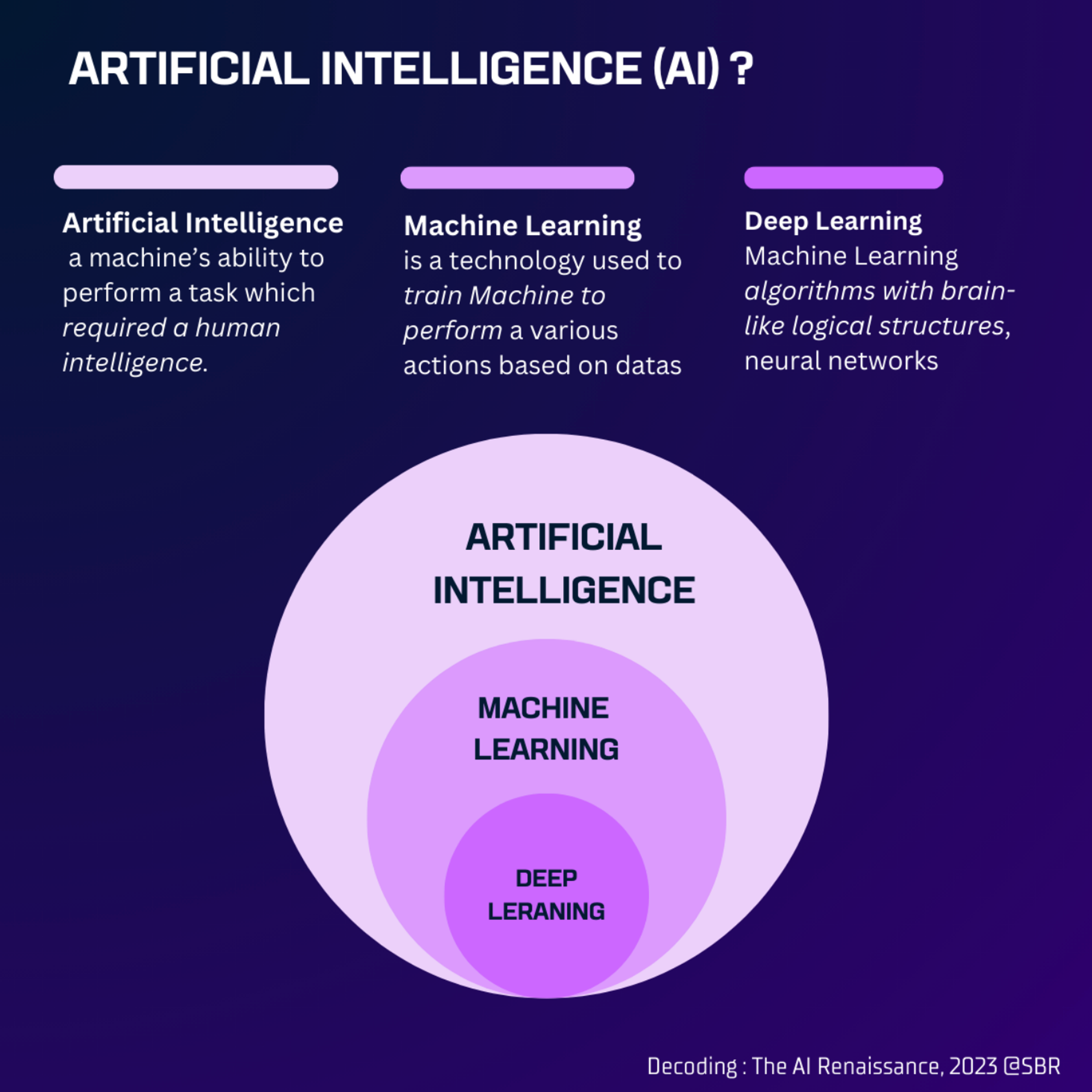

First, we need to answer this question: What is AI? AI stands for artificial intelligence, and one widely quoted definition is:

"a machine’s ability to perform the cognitive functions we usually associate with human minds." - McKinsey & Company

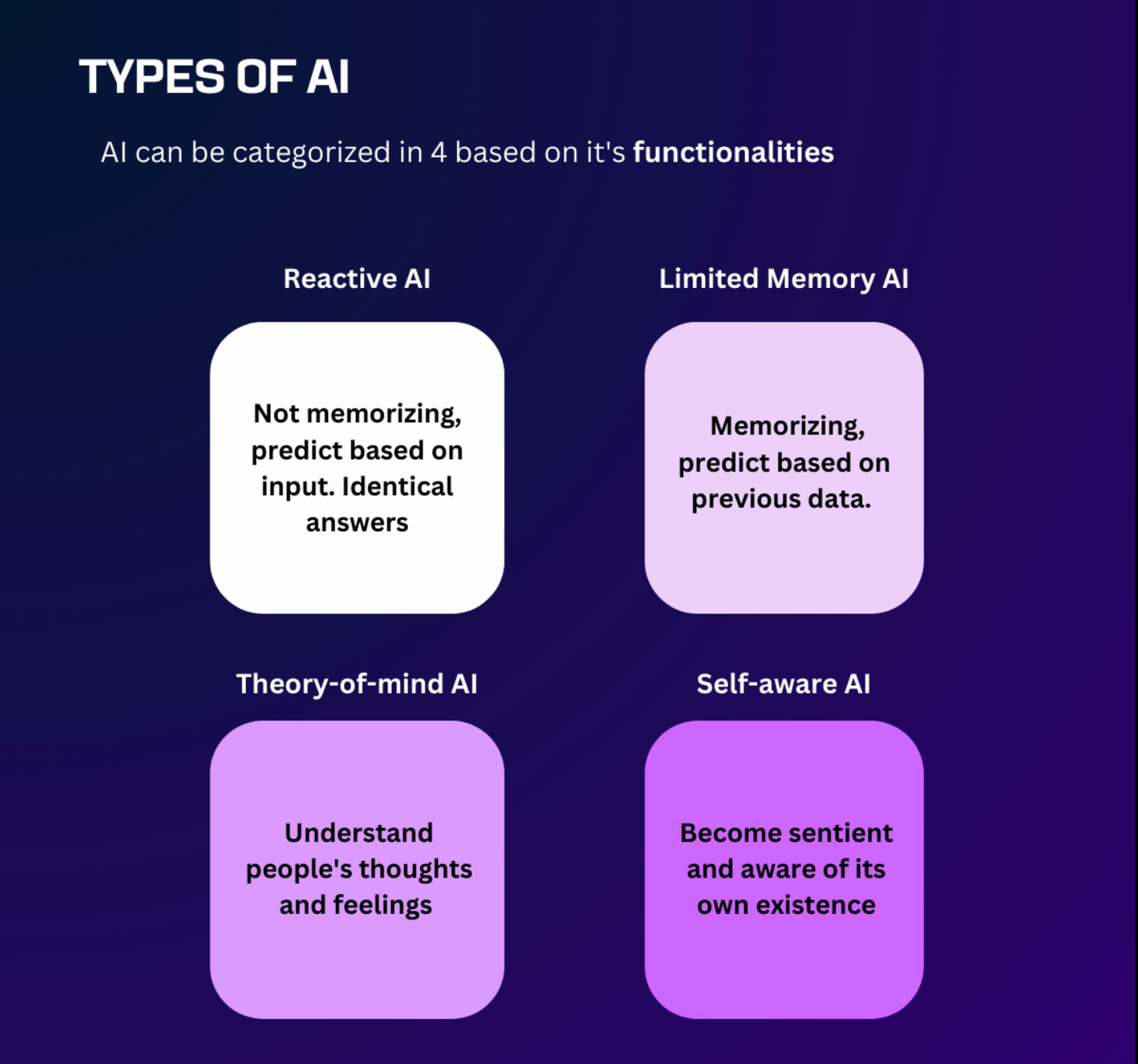

The concept of AI was first introduced in the 1950s, defined as a machine’s ability to perform a task that requires human intelligence. AI is commonly grouped into four types based on functionality.

Reactive AI

- Reactive AI is programmed to produce output based on its input. It responds to identical situations in the same way, without being able to learn actions or conceive of the past or future.

Limited memory AI

- Limited memory AI stores previous data and predictions, using that data to make better predictions.

Theory-of-mind AI

- Theory of mind is the understanding that everyone has their own thoughts and feelings. A theory-of-mind AI would need to model those mental states, which requires capabilities similar to a human's.

Self-aware AI

- Self-aware AI is considered the most advanced type of AI: a hypothetical state in which AI becomes sentient and aware of its own existence. The humanoids that "wake up" in apocalypse movies are the popular picture of self-aware AI.
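The difference between the first two types can be made concrete with a small sketch. The thermostat scenario, the 20-degree threshold, and the names `reactive_thermostat` and `LimitedMemoryThermostat` are all hypothetical, chosen only for illustration: the reactive version maps each input straight to an output, while the limited-memory version keeps a short window of past readings and lets them influence its decision.

```python
from collections import deque


def reactive_thermostat(temperature_c: float) -> str:
    """Reactive: the output depends only on the current input."""
    return "heat_on" if temperature_c < 20.0 else "heat_off"


class LimitedMemoryThermostat:
    """Limited memory: decisions use a short window of past readings."""

    def __init__(self, window: int = 3) -> None:
        # deque with maxlen silently drops the oldest reading when full.
        self.readings = deque(maxlen=window)

    def decide(self, temperature_c: float) -> str:
        self.readings.append(temperature_c)
        average = sum(self.readings) / len(self.readings)
        return "heat_on" if average < 20.0 else "heat_off"


# Identical inputs always give identical outputs for the reactive version.
print(reactive_thermostat(19.0), reactive_thermostat(19.0))

# The limited-memory version may answer the same input differently,
# because earlier (warmer) readings are still in its window.
memory = LimitedMemoryThermostat(window=3)
print([memory.decide(t) for t in (25.0, 25.0, 19.0)])
```

Real limited-memory systems store far richer data than a three-value window, but the structural difference (stateless function versus object with stored history) is the same.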

Core Concepts in AI

There are four core concepts you need to understand about AI: machine learning, neural networks, natural language processing, and computer vision. Because AI is an umbrella term covering many technologies, these concepts will help you categorize existing AI advancements.

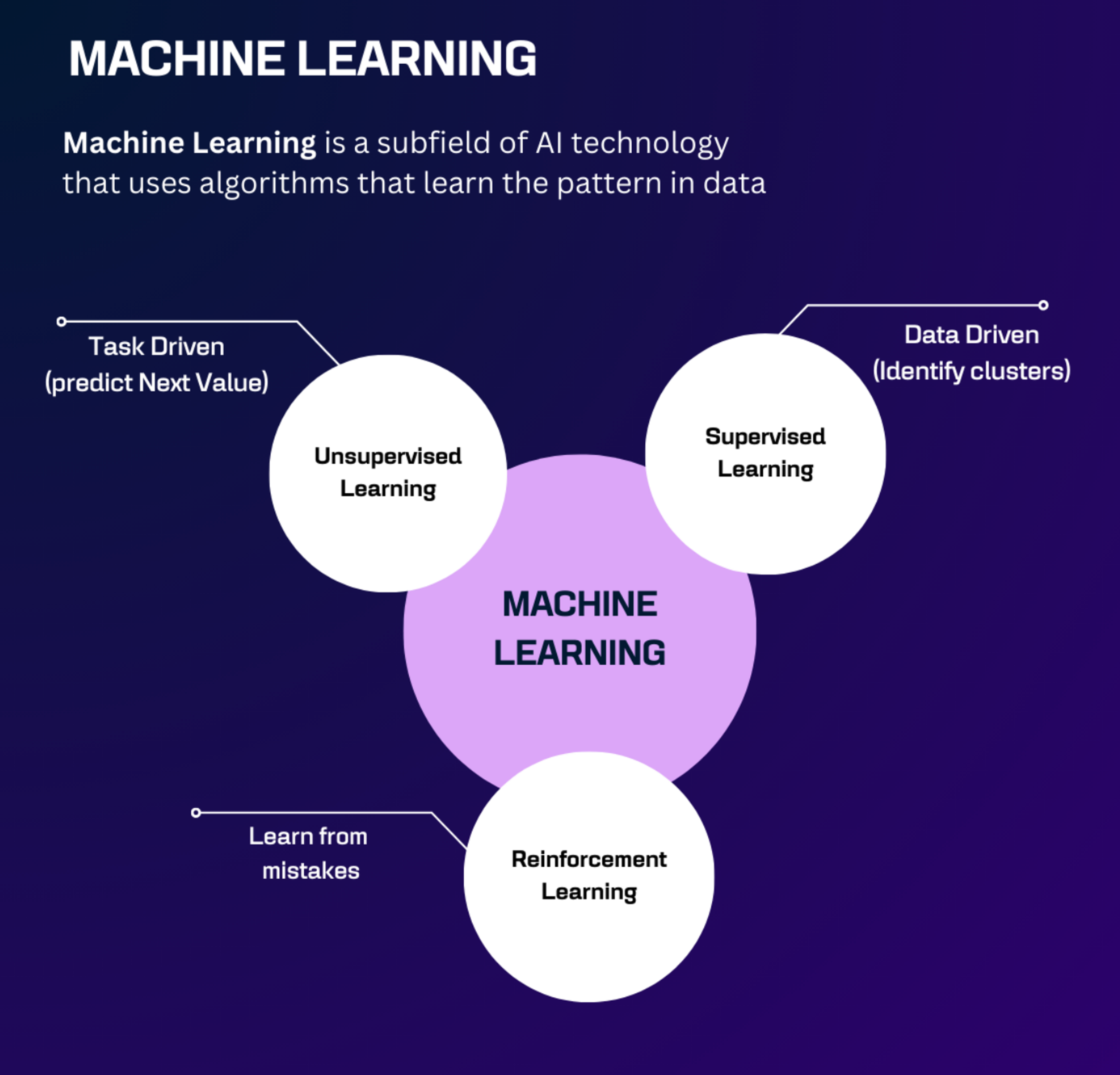

A. Machine learning

Machine learning is a technology used to train machines to perform various actions, such as predictions and recommendations, based on data or experience. There are three categories of machine learning algorithms.

1. Supervised learning

Supervised learning is an approach in which an algorithm learns from 'labeled training data' so that it can predict outcomes for unseen data. In this paradigm, each training input is paired with its correct output (the label). Supervised learning helps us predict future events based on past experience and labeled examples. Here are two methods that are part of supervised learning.

- Regression

- Regression predicts numerical values from a set of data points, such as sales revenue projections for a given business.

- Classification

- Classification assigns data to specific categories, such as separating apples from oranges.
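Both methods can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production approach: the data values are made up, the regression uses ordinary least squares on a single input, and the classifier is a nearest-centroid rule chosen for brevity. The function names (`fit_line`, `train_centroids`, `classify`) and the fruit features (redness, surface roughness) are hypothetical.

```python
def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def train_centroids(samples, labels):
    """Classification: average the labeled examples of each class."""
    grouped = {}
    for features, label in zip(samples, labels):
        grouped.setdefault(label, []).append(features)
    return {
        label: [sum(dim) / len(rows) for dim in zip(*rows)]
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Assign the label whose centroid is closest to the new sample."""
    def squared_distance(center):
        return sum((a - b) ** 2 for a, b in zip(center, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Regression: made-up ad spend vs. revenue, then a projection for spend = 5.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope * 5 + intercept)

# Classification: made-up (redness, surface roughness) features per fruit.
samples = [(0.9, 0.1), (0.8, 0.2), (0.3, 0.8), (0.2, 0.7)]
labels = ["apple", "apple", "orange", "orange"]
centroids = train_centroids(samples, labels)
print(classify(centroids, (0.7, 0.3)))
```

In both cases the labeled examples are what make the learning "supervised": the line is fitted to known revenues, and the centroids are computed from fruit that has already been identified.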

2. Unsupervised Learning

Unsupervised learning is an approach in which an algorithm analyzes and clusters unlabeled datasets, with no known output to learn from. Because the training information is neither classified nor labeled, there is no correct answer to check against, so the results can be less accurate than those of supervised learning. It helps in exploring the data and can draw inferences that describe hidden structures in unlabeled data. Here are two methods that are part of unsupervised learning.

- Dimensionality Reduction

- Dimensionality reduction is a technique, often used in the data preprocessing stage, that reduces the number of features in a dataset. For example, autoencoders can remove noise from visual data to improve picture quality.

- Clustering

- Clustering is a technique that finds similarities in data points and groups similar data points together.
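Clustering can be sketched with k-means, one common clustering algorithm (the article does not name a specific one, so this choice is an assumption). The points are made up, the function name `k_means` is hypothetical, and the first `k` points are used as starting centroids to keep the sketch deterministic; real implementations usually pick starting points more carefully.

```python
def k_means(points, k, iterations=10):
    """Group points into k clusters by repeatedly averaging members."""
    # Assumption for simplicity: start from the first k points.
    centroids = [list(p) for p in points[:k]]
    assignments = []
    for _ in range(iterations):
        # Step 1: assign every point to its nearest centroid.
        assignments = [
            min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            for p in points
        ]
        # Step 2: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments


points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 8.5), (1.0, 1.5), (8.5, 9.0)]
centroids, assignments = k_means(points, k=2)
print(assignments)
print(centroids)
```

No labels are supplied anywhere: the algorithm discovers the two groups purely from how close the points are to one another.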

3. Reinforcement learning

Reinforcement learning is a feedback-based machine learning technique. In this type of learning, an agent (a computer program) explores an environment, performs actions, and receives rewards as feedback on those actions. Typical applications include:

- Real-time decisions

- Game AI

- Robot Navigation

- Skill Acquisition

- Learning Tasks
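The explore, act, and receive-reward loop can be sketched with tabular Q-learning, one well-known reinforcement learning algorithm (picked here for illustration; the article does not name a specific one). Everything about the setup is an assumption: a hypothetical five-cell corridor where the agent starts in cell 0 and earns a reward of 1 for reaching cell 4, with made-up learning rate and discount values.

```python
import random

N_STATES = 5            # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)      # index 0 moves left, index 1 moves right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (assumed values)


def step(state, action):
    """Environment: apply an action, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, max_steps=200, seed=0):
    """Learn a table of action values from random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _ in range(max_steps):
            a = rng.randrange(len(ACTIONS))  # explore with random actions
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward
            # reward + discounted best value of the next state.
            future = 0.0 if done else GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (reward + future - q[state][a])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy for the non-goal cells: pick the higher-valued action.
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

The agent is never told that moving right is correct. It only sees rewards, and the learned values end up favoring the actions that lead toward the goal.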

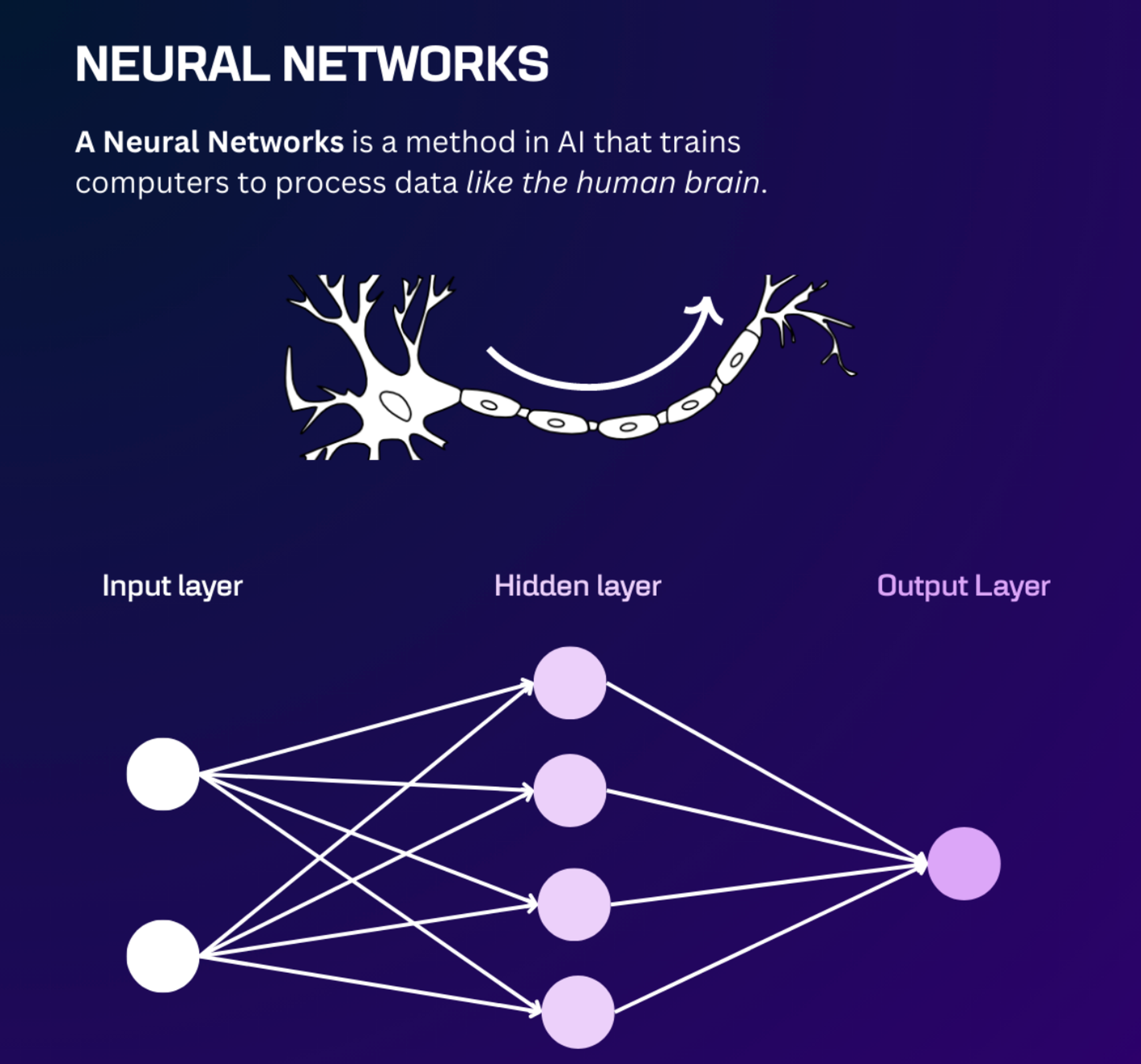

B. Neural Networks

A neural network is a method in artificial intelligence that teaches computers to process data in a way inspired by the human brain. It is a type of machine learning model built from interconnected nodes, or neurons, arranged in a layered structure that resembles the human brain. Neural networks with several processing layers are known as "deep" networks and are the basis of deep learning algorithms.
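The layered structure can be shown with a tiny forward pass. This is a sketch only: the weights below are hand-picked rather than learned from data, and the names (`relu`, `dense`, `forward`) are hypothetical. The network has two inputs, one hidden layer of two neurons, and one output neuron, and with these weights it computes XOR, a function that a single neuron cannot represent on its own.

```python
def relu(x):
    """Common activation function: pass positives, clip negatives to 0."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights (an assumption for illustration, not trained values).
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def forward(x1, x2):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda v: v)
    return output[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, forward(a, b))
```

In practice the weights are not written by hand. Training adjusts them automatically from examples, and deep networks stack many more layers of the same kind.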

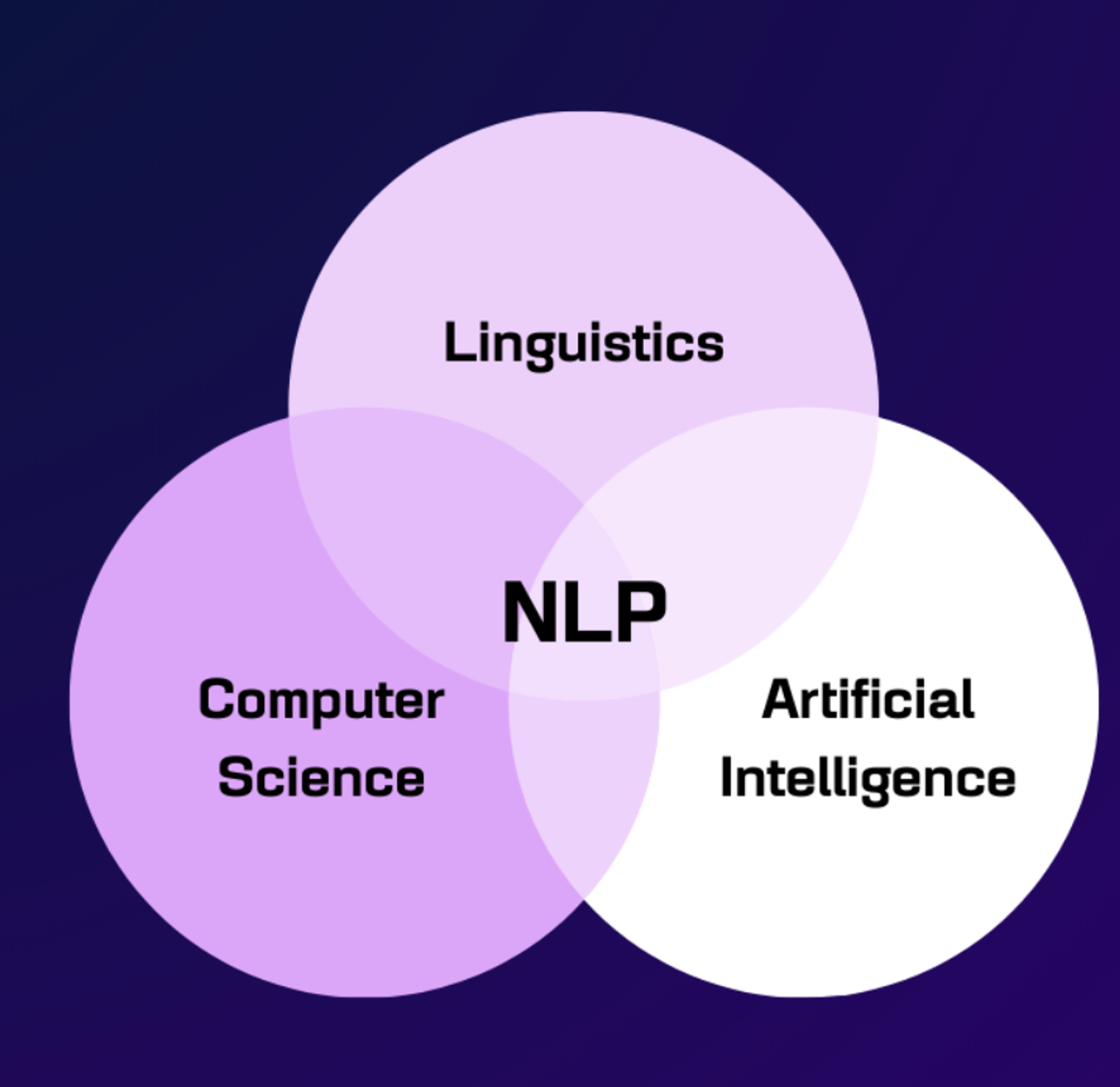

C. Natural Language Processing

ChatGPT, often described as a new competitor to Google, is based on Natural Language Processing (NLP). NLP is a technology that allows computers to understand human language, and Natural Language Generation (NLG) is a technology that allows computers to generate text of their own. NLP extracts meaning from text, so when you type a prompt into ChatGPT, it produces a fitting response through NLP and NLG. In business, NLP has been used to build automated office assistants, customer service tools, and many other communication-focused applications. Because NLP techniques can be applied to many languages, the range of industry uses is broad.

Here are some of the main NLP tasks with direct real-world applications:

- Speech recognition

- Sentiment Analysis

- Text-to-speech/image/video

- Word segmentation

- Machine translation: translating text from one human language to another

- Question answering
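As one concrete example from the list above, sentiment analysis can be sketched with a simple word-list approach. This is a toy illustration under loud assumptions: the `POSITIVE` and `NEGATIVE` word sets are made up, the function names are hypothetical, and systems such as ChatGPT rely on learned models rather than fixed word lists.

```python
import re
from collections import Counter

# Hypothetical mini-lexicons; real systems learn these signals from data.
POSITIVE = {"good", "great", "love", "helpful"}
NEGATIVE = {"bad", "slow", "hate", "broken"}


def tokenize(text):
    """Word segmentation: lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Score text by counting positive words minus negative words."""
    counts = Counter(tokenize(text))
    score = sum(counts[w] for w in POSITIVE) - sum(counts[w] for w in NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The support team was great and very helpful!"))
print(sentiment("The app is slow and the login is broken."))
```

Even this crude version shows the basic pipeline: raw text is segmented into words, and meaning is then estimated from those words.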

In May 2023, Chegg, a platform that helps students with their homework, saw its shares plunge 38% after ChatGPT's popularity grew. Unlike Chegg, which provides various answer options, ChatGPT analyzes the text of your prompt through NLP and returns a response customized to it.

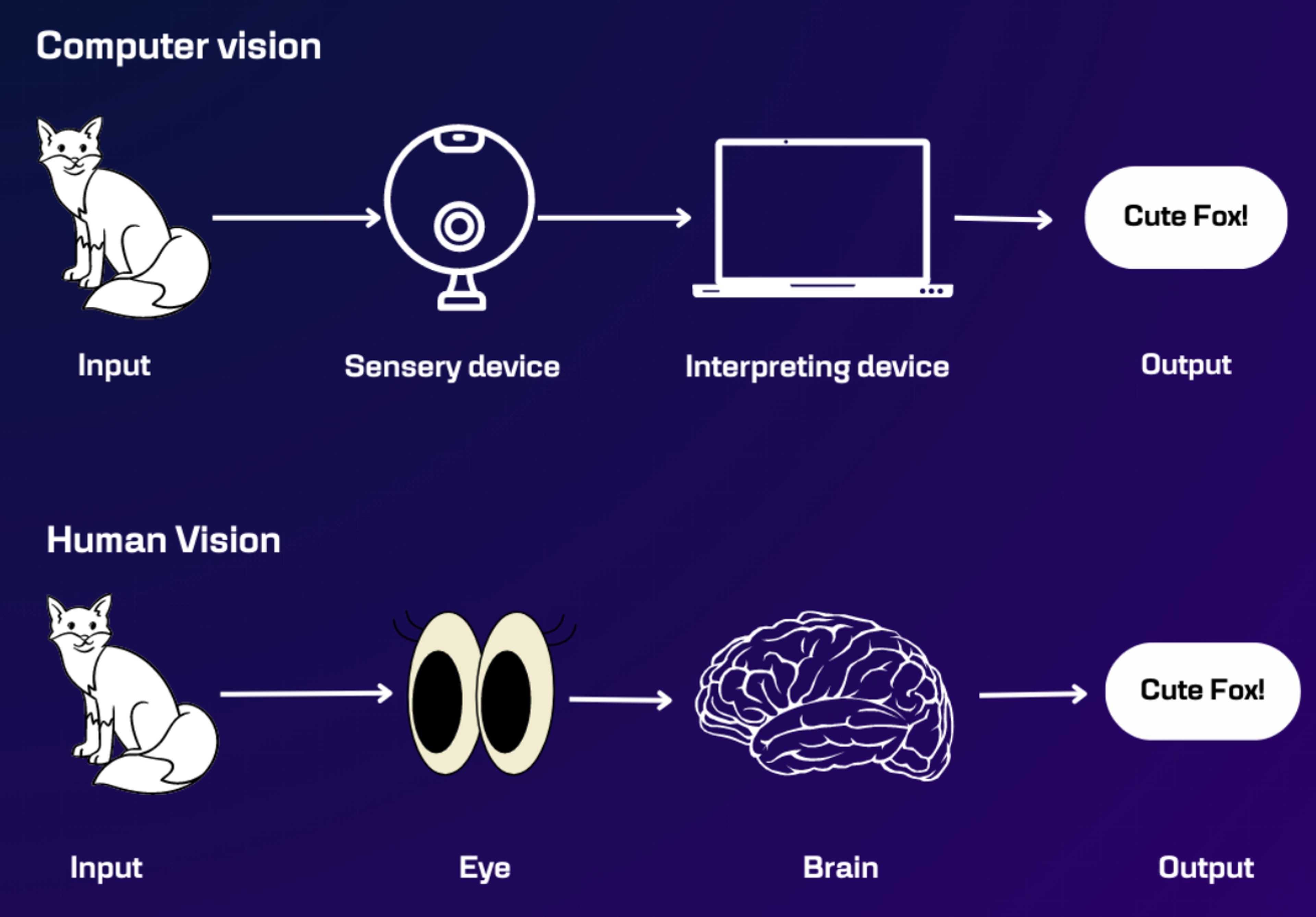

D. Computer Vision (CV)

Computer Vision (CV) is a field of AI that trains computers to capture and interpret information from image and video data. It focuses on identifying and understanding objects and people in images and videos, and it works in a way that imitates human vision:

- First, a sensing device captures an image, and the image is sent to an interpreting device.

- Second, the interpreting device analyzes the image. Deep learning models automate much of this process, but the models are often trained by first being fed thousands of labeled or pre-identified images.

- Third, the object is identified or classified.
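The three steps above can be sketched on a very small scale. This is a deliberately simplified stand-in: instead of a deep learning model trained on thousands of images, it uses a nearest-neighbor comparison against two made-up, labeled 3x3 images. The names `LABELED_IMAGES`, `pixel_distance`, and `classify_image` are hypothetical.

```python
# Labeled examples: tiny 3x3 black-and-white images (1 = bright pixel).
LABELED_IMAGES = [
    ([[0, 1, 0], [0, 1, 0], [0, 1, 0]], "vertical line"),
    ([[0, 0, 0], [1, 1, 1], [0, 0, 0]], "horizontal line"),
]


def pixel_distance(image_a, image_b):
    """Sum of squared differences between corresponding pixels."""
    return sum(
        (a - b) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for a, b in zip(row_a, row_b)
    )


def classify_image(image):
    """Return the label of the most similar labeled example."""
    _, label = min(
        LABELED_IMAGES, key=lambda pair: pixel_distance(image, pair[0])
    )
    return label


# Step 1: a "captured" image, here a vertical line with one pixel missing.
captured = [[0, 1, 0], [0, 1, 0], [0, 0, 0]]

# Steps 2 and 3: compare against labeled examples and classify the object.
print(classify_image(captured))
```

Real computer vision models learn which visual features matter from large labeled datasets, but the overall flow (capture, interpret against what was learned, classify) follows the same outline.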

Computer vision plays an important role in AR/MR, facial recognition, and self-driving cars.

Conclusion

We have explored the fascinating field of Artificial Intelligence (AI) and its various subfields. Here is a recap of the key points discussed in the article:

AI encompasses a wide range of technologies and techniques that aim to create intelligent systems capable of performing tasks that typically require human intelligence.

Looking ahead, the future of AI appears promising. As technology continues to evolve, AI will likely become more integrated into our lives, revolutionizing industries such as healthcare, transportation, and finance. AI-driven innovations such as autonomous vehicles, personalized medicine, and smart cities hold the promise of creating a more efficient and interconnected world.

In conclusion, AI is a transformative technology that has the potential to revolutionize various aspects of our lives. Embracing AI and exploring its capabilities will be crucial for individuals and organizations to stay at the forefront of innovation in the years to come.

Works Cited

- Neural Network - Investopedia

- Supervised vs. Unsupervised Learning: Understand the Differences - IBM Cloud Blog

- How Companies Are Already Using AI - Harvard Business Review

- Artificial Intelligence Tutorial - Guru99

- Artificial Intelligence (AI) - Investopedia

- What Is AI? - McKinsey & Company

- A Brief History of Artificial Intelligence - Harvard Medical School - Science in the News

- Computer Vision - IBM

- 7 Types of Artificial Intelligence - Forbes